Augmented Reality in the Rhetoric and Symbolic Experience of Comic Book Images

Published online: 17 Mar 2025

Received: 18 Oct 2024

Accepted: 07 Feb 2025

DOI: https://doi.org/10.2478/amns-2025-0208


© 2025 Yimo Sun, published by Sciendo

This work is licensed under the Creative Commons Attribution 4.0 International License.

Comics, an art form with a long history, have long been underappreciated. Traditional printed comics rely mainly on paper, and information flows in one direction: the creator conveys content through conception and drawing, and readers take in this one-sided output on their own [1-2]. With the spread of computers and the Internet, digital comics have risen to prominence, and interactive comics built on digital comics now offer readers an interactive experience in comic form [3-4]. Readers are no longer mere receivers of information but participants who can steer how the story develops. Yet because interactive experiences of all kinds are now commonplace, people have grown used to simple interactions, and adding interactive technology alone is no longer enough to make comics stand out.

In recent years, after continuous growth and improvement, augmented reality technology has begun to be applied to comics. It represents a further elevation of digital comics and can quickly attract the attention of comic readers [5-6]. Augmented reality is a derivative of virtual reality (VR) technology and relies on artificial intelligence, digital sensing, computer graphics and other technologies to achieve its final effect [7-10]. When a person wearing a special helmet or display equipped with sensors moves, the computer immediately finds a reference point in the actual environment and, after complex calculations, accurately superimposes the computer-generated virtual imagery or data onto the real scene [11-14]. Applying augmented reality to interactive comics makes them more interactive and, through technology and creativity, adds benefit and enjoyment to people's lives [15-16]. Comics have long been a neglected art form, and technological innovation may bring an unprecedented interactive experience to people who have never taken them seriously.

This paper analyzes the graphic narrative and symbolic language of comics and argues that icons and graphic rhetorical symbols play an important role in expressing comic content. It briefly outlines the types of image rhetoric commonly used in comic texts and identifies the new forms of comic development in the digital era, including strip comics and dynamic interactive comics. It then analyzes the advantages of augmented reality-based interactive comics and proposes two techniques for an augmented reality system: an improved tracking and registration algorithm based on natural feature point finding, and a global illumination model based on a pixel enhancement algorithm. The performance of the improved tracking and registration algorithm and of the real-time lighting rendering algorithm is tested separately. The optimized lighting rendering technique is then combined with a dynamic comic cloud simulation to test cloud image creation in dynamic comics with augmented reality. Finally, a survey is used to collect and analyze users' experience of augmented reality-based comics.

Image narrative is an important way of expression in comics. As an art form, comics express storyline, characterization and emotion through image narrative.

Image narrative is a narrative style that conveys information, emotions and opinions through visual elements. In a broad sense, image narrative has become the modern counterpart of visual culture and a basic language and mode of expression in today's culture. In a narrower sense, image narratives are those that exist within the human cultural system and are carried by various communication media, in particular narrative expression that takes image symbols such as film and television, painting, photography and advertising as its basic system of meaning. Images consist of a visual part and a pictorial part. The visual part comprises images made from real scenes, such as photography, video, film, television and all kinds of advertisements. The pictorial part comprises images drawn by people, mainly illustrations, comics, cartoon products and video games. Together they make up today's era of pictorial culture.

Comic symbol language includes:

Modeling symbols (character symbols, scene and prop symbols).

Textual symbols (dialogue and speech balloons, mimetic words and onomatopoeia).

Icons and graphic rhetoric symbols (emoticons, speed lines and effect lines). These play an important role in comics: they convey emotion and information in depth and help to enhance the reader's reading experience and understanding.

Comics are a form of graphic narrative, and images are the basic units of the visual text, equivalent to sentences in a written article. In an article, sentences are linked through logical association and rhetorical devices to form paragraphs with a stable structure and complete meaning. Similarly, images are linked to one another by means of image rhetoric to form a visual discourse with a stable structure and complete meaning [17-18].

The image rhetoric commonly used in comic texts includes imitation, metaphor, substitution, exaggeration, representation, anthropomorphism, symbolism and quotation.

Imitation (mimicry) refers to the rhetorical device of describing or recording the state of things.

Anthropomorphism is an expressive method of endowing things with human thoughts, feelings, and activities.

In literature, representation refers to portraying things that are unknowable or invisible in real life as visible, audible and vivid images through imagination. In visual images, creating such representations requires bold imagination: objects, feelings and situations are expressed in a limited number of images, giving the viewer an unreal and novel visual experience. In comics, the rhetorical practice of representation lets the audience form a concrete, figurative cognition of certain things and deepens their understanding.

In visual language, metaphor works by seizing on the similarity between two images and presenting one thing as another. In self-media popular science comics, an image metaphor is often accompanied by a textual metaphor, so that readers are not confused by the substitution of images.

Exaggeration: through imagination and a subjective transformation of sensory impressions, the artist exaggerates or highlights certain features of objects in the real world, breaking through visual conventions and the physical form of things. This adds fantasy and fun to the picture, attracts readers' attention and deepens their impression.

Substitution (borrowing) is mainly used in the visual text of popular science comics for concepts and events that cannot be expressed clearly by a simple image; they are instead represented by an image that is logically related to them.

Symbolism: in popular science comics, visual images endowed with special symbolic meaning appear in key positions in the picture, so that a concrete image expresses an abstract and profound meaning.

In recent years, the spread of smartphones has gradually shifted people's entertainment onto their phones, and the presentation of comics has evolved accordingly. Strip comics are mainly read on mobile devices, and their top-to-bottom layout suits mobile reading. Because their panels run continuously, strip comics have a distinctive narrative method that strongly influences how comic scenes are divided into panels (storyboarding). Compared with traditional paper comics, strips read on phones make better use of people's fragmented time, fit the reading habits of modern society, and offer readers a novel reading experience.

With the continuous development of new media technology, the image narrative of comics keeps changing, and dynamic interactive comics have emerged as a new art form. Dynamic comics combine elements of comics and animation; compared with traditional comics and strips, they integrate sound and visuals and are more interactive on mobile devices.

In a broad sense, interactive comics are a form of digital comics built on dynamic digital comics. They use hypermedia technology and computer platforms as carriers and combine sound, animation and interactive technology with traditional comics. Unlike traditional comics, interactive comics use a specific digital platform to add animation or sound to individual subplots or panels of an ordinary comic page, so that readers can freely control and take part in the progression of the plot.

Interactive comics come in many types. In the augmented reality category, either traditional or digital comics can use augmented reality technology to combine computer-generated virtual imagery with the real environment, giving readers the illusion that the characters leap off the paper and into reality [19-20].

For fans, augmented reality's real-time interaction and its combination of the real and the virtual make reading interactive comics more than simply reading a comic; readers gain much more, because AR superimposes three layers of enhancement on the experience.

To integrate the virtual scene seamlessly with the real scene, an augmented reality system must determine where virtual objects are placed in the real scene as well as the observer's real-time position and orientation. Tracking and registration is the key technology for solving these problems.

This paper describes the process of finding and matching natural feature points with the SURF (Speeded-Up Robust Features) algorithm and implements it.

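SURF gains much of its speed from the integral image, which allows the sum over any rectangular region to be computed quickly. For an input image $I$, the value of the integral image $I_{\Sigma}$ at a point $\mathbf{x} = (x, y)$ is the sum of the pixel values in the rectangular region spanned by the image origin and $\mathbf{x}$:

$$I_{\Sigma}(\mathbf{x}) = \sum_{i=0}^{x}\sum_{j=0}^{y} I(i, j)$$

Once $I_{\Sigma}$ has been computed, the sum over any upright rectangle needs only three additions or subtractions of its four corner values, regardless of the size of the rectangle.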
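The following is a minimal pure-Python sketch of the integral image and the four-corner box sum, assuming a grayscale image stored as a list of rows. The function names `integral_image` and `box_sum` are illustrative only; the paper's own implementation is in C++ with OpenCV and is not shown.

```python
from typing import List

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """Return S where S[y][x] is the sum of img over rows 0..y and columns 0..x."""
    h, w = len(img), len(img[0])
    s = [[0.0] * w for _ in range(h)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            s[y][x] = row_sum + (s[y - 1][x] if y > 0 else 0.0)
    return s


def box_sum(s: Image, top: int, left: int, height: int, width: int) -> float:
    """Sum of rows top..top+height-1 and columns left..left+width-1.

    Only the four corner values of the integral image are read, so the cost
    does not depend on the rectangle size. Corners above or left of the image
    count as 0; the rectangle must not extend past the bottom/right edge.
    """
    def at(y: int, x: int) -> float:
        return s[y][x] if y >= 0 and x >= 0 else 0.0

    bottom, right = top + height - 1, left + width - 1
    return at(bottom, right) - at(top - 1, right) - at(bottom, left - 1) + at(top - 1, left - 1)
```

For example, for the 3×3 image `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]`, `box_sum(s, 1, 1, 2, 2)` returns 28.0 (5 + 6 + 8 + 9) from just four lookups.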

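SURF detects feature points with the determinant of the Hessian matrix and uses the integral image to reduce computation time. For a point $\mathbf{x} = (x, y)$ in image $I$, the Hessian matrix at scale $\sigma$ is defined as

$$\mathcal{H}(\mathbf{x}, \sigma) = \begin{bmatrix} L_{xx}(\mathbf{x}, \sigma) & L_{xy}(\mathbf{x}, \sigma) \\ L_{xy}(\mathbf{x}, \sigma) & L_{yy}(\mathbf{x}, \sigma) \end{bmatrix}$$

where $L_{xx}(\mathbf{x}, \sigma)$ is the convolution of the Gaussian second-order derivative $\frac{\partial^2}{\partial x^2} g(\sigma)$ with the image $I$ at point $\mathbf{x}$, and $L_{xy}(\mathbf{x}, \sigma)$ and $L_{yy}(\mathbf{x}, \sigma)$ are defined analogously.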

Using the formulas above, the Hessian determinant is computed at every pixel of the image, and the resulting values (their local maxima over position and scale) are used to locate the feature points.

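To speed up the computation and reduce the number of convolution operations, SURF approximates the Gaussian second-order derivative kernels with box filters, whose responses $D_{xx}$, $D_{yy}$ and $D_{xy}$ can each be computed from the integral image at constant cost. The Hessian determinant is then approximated as:

$$\det(\mathcal{H}_{\text{approx}}) = D_{xx} D_{yy} - (w D_{xy})^2$$

where $w$ is a relative weight that compensates for the box-filter approximation of the Gaussian kernels:

$$w = \frac{\|L_{xy}(1.2)\|_F \, \|D_{yy}(9)\|_F}{\|L_{yy}(1.2)\|_F \, \|D_{xy}(9)\|_F}$$

Here $\|\cdot\|_F$ is the Frobenius norm, $L(1.2)$ denotes a Gaussian second-order derivative at scale $\sigma = 1.2$, and $D(9)$ denotes the corresponding $9 \times 9$ box filter. In the standard SURF formulation this ratio is about 0.912, and $w = 0.9$ is used in practice.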
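As an illustration of the box-filter approximation, the sketch below evaluates $D_{xx}$, $D_{yy}$ and $D_{xy}$ for the smallest 9×9 filters (lobe size 3, corresponding to σ = 1.2) from the integral image and returns $D_{xx}D_{yy} - (wD_{xy})^2$ with w = 0.9. The filter layout follows the standard SURF description; the helper names are hypothetical, responses are normalized by the filter area as in common implementations, and only a single scale is handled (no scale-space pyramid or non-maximum suppression).

```python
from typing import List

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    h, w = len(img), len(img[0])
    s = [[0.0] * w for _ in range(h)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            s[y][x] = row_sum + (s[y - 1][x] if y > 0 else 0.0)
    return s


def box_sum(s: Image, top: int, left: int, height: int, width: int) -> float:
    def at(y: int, x: int) -> float:
        return s[y][x] if y >= 0 and x >= 0 else 0.0
    bottom, right = top + height - 1, left + width - 1
    return at(bottom, right) - at(top - 1, right) - at(bottom, left - 1) + at(top - 1, left - 1)


def hessian_det_9x9(s: Image, r: int, c: int, w: float = 0.9) -> float:
    """Approximate det(H) at (row r, col c) with the 9x9 SURF box filters.

    The pixel must be at least 4 pixels away from every image border.
    """
    size, lobe = 9, 3
    border = (size - 1) // 2  # 4
    # D_xx: +1 over a 5x9 band minus 3x the central 5x3 block -> weights +1, -2, +1 along x
    dxx = (box_sum(s, r - lobe + 1, c - border, 2 * lobe - 1, size)
           - 3 * box_sum(s, r - lobe + 1, c - lobe // 2, 2 * lobe - 1, lobe))
    # D_yy: the same filter transposed -> weights +1, -2, +1 along y
    dyy = (box_sum(s, r - border, c - lobe + 1, size, 2 * lobe - 1)
           - 3 * box_sum(s, r - lobe // 2, c - lobe + 1, lobe, 2 * lobe - 1))
    # D_xy: four 3x3 lobes in the quadrants, +1 on one diagonal and -1 on the other
    dxy = (box_sum(s, r - lobe, c + 1, lobe, lobe)
           + box_sum(s, r + 1, c - lobe, lobe, lobe)
           - box_sum(s, r - lobe, c - lobe, lobe, lobe)
           - box_sum(s, r + 1, c + 1, lobe, lobe))
    area = float(size * size)  # normalize responses by the filter area
    dxx, dyy, dxy = dxx / area, dyy / area, dxy / area
    return dxx * dyy - (w * dxy) ** 2
```

A bright 3×3 blob centered on the evaluated pixel yields a positive determinant, while a flat region yields zero, which is why local maxima of this response mark blob-like feature points.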

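Feature point matching finds corresponding feature points between the images to be aligned by comparing the descriptor of each feature point. A common way to speed up SURF matching is to combine the trace of the Hessian matrix with the Euclidean distance: the sign of the trace (the Laplacian) distinguishes bright blobs on dark backgrounds from the reverse case, so only feature points with the same sign need to be compared, and among those the candidate with the smallest Euclidean distance between descriptors is taken as the match. For descriptors $\mathbf{a}$ and $\mathbf{b}$ of length $n$ (64 for standard SURF), the distance is:

$$d(\mathbf{a}, \mathbf{b}) = \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2}$$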
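The matching rule can be sketched as follows, assuming each feature is stored as a pair of its Laplacian sign (the sign of the Hessian trace) and its descriptor. The optional `max_dist` cut-off is a hypothetical parameter added for illustration; it is not specified in the paper.

```python
import math
from typing import List, Optional, Sequence, Tuple

# A feature: (sign of the Hessian trace, descriptor vector)
Feature = Tuple[int, Sequence[float]]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def match_features(src: List[Feature], dst: List[Feature],
                   max_dist: float = float("inf")) -> List[Tuple[int, int]]:
    """Nearest-neighbour matching that skips pairs whose Laplacian signs differ."""
    matches = []
    for i, (sign_a, desc_a) in enumerate(src):
        best_j: Optional[int] = None
        best_d = max_dist
        for j, (sign_b, desc_b) in enumerate(dst):
            if sign_a != sign_b:  # cheap rejection before computing the distance
                continue
            d = euclidean(desc_a, desc_b)
            if d < best_d:
                best_j, best_d = j, d
        if best_j is not None:
            matches.append((i, best_j))
    return matches
```

The sign test costs one comparison per pair, so it removes roughly half of the distance computations when bright and dark blobs are equally common.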

The matches produced by SURF have a low error rate, but some mismatches inevitably remain. The widely used Random Sample Consensus (RANSAC) algorithm can be applied to remove the mismatched feature points.
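A self-contained sketch of RANSAC for mismatch removal is shown below. It assumes the model being fitted is a homography (the matrix later used for registration), estimated from four random correspondences by solving the 8×8 linear system obtained by fixing $h_{33} = 1$; the iteration count, the 2-pixel inlier threshold and all function names are illustrative assumptions. In practice, OpenCV's `findHomography` function called with the `RANSAC` method provides this step.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> Optional[List[float]]:
    """Gaussian elimination with partial pivoting; None if the system is singular."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            return None  # e.g. three collinear sample points
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def homography_from_4(src: Sequence[Point], dst: Sequence[Point]) -> Optional[Matrix]:
    """Solve for H (with h33 = 1) from exactly four correspondences."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    h = solve(a, b)
    return None if h is None else [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_h(h: Matrix, p: Point) -> Optional[Point]:
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if abs(w) < 1e-12:
        return None
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


def ransac_homography(src: Sequence[Point], dst: Sequence[Point],
                      iters: int = 200, thresh: float = 2.0, seed: int = 0):
    """Keep the model with the most inliers (reprojection error below thresh)."""
    rng = random.Random(seed)
    best_h, best_inliers = None, []
    idx = list(range(len(src)))
    for _ in range(iters):
        sample = rng.sample(idx, 4)
        h = homography_from_4([src[i] for i in sample], [dst[i] for i in sample])
        if h is None:
            continue
        inliers = []
        for i in idx:
            q = apply_h(h, src[i])
            if q is not None and (q[0] - dst[i][0]) ** 2 + (q[1] - dst[i][1]) ** 2 < thresh ** 2:
                inliers.append(i)
        if len(inliers) > len(best_inliers):
            best_h, best_inliers = h, inliers
    return best_h, best_inliers
```

Production code usually refits the homography to all inliers by least squares after the loop; that refinement is omitted here for brevity.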

The number of detected feature points matters for the efficiency and accuracy of image matching and, to some extent, determines the efficiency of the whole system. Therefore, in this paper the image is first preprocessed before feature points are extracted.

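Histogram equalization of a grayscale image requires a mapping function that redistributes the gray values over the available range. Let $t$ be the gray value of the original image at point $(x, y)$, $p$ the corresponding value after mapping, $[0, R-1]$ the gray-level range, and $Q(t)$ the mapping function. $Q(t)$ usually has to satisfy two conditions:

(1) for $0 \le t \le R-1$, $Q(t)$ is single-valued and monotonically increasing, so that the ordering of gray levels is preserved;

(2) for $0 \le t \le R-1$, $0 \le Q(t) \le R-1$, so that the output stays within the valid gray-level range.

Let the probability of occurrence of gray level $t_k$ be

$$P(t_k) = \frac{n_k}{n}, \quad k = 0, 1, \ldots, R-1$$

where $n_k$ is the number of pixels with gray level $t_k$ and $n$ is the total number of pixels in the image. The mapping is then taken as the scaled cumulative distribution function:

$$p_k = Q(t_k) = (R-1) \sum_{j=0}^{k} P(t_j) = (R-1) \sum_{j=0}^{k} \frac{n_j}{n}$$

The cumulative distribution function satisfies both conditions above, so it can be used to map the gray levels of the original image uniformly onto the equalized image.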
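The equalization mapping $p_k = (R-1)\sum_{j \le k} n_j / n$ can be sketched as a lookup table, assuming integer gray levels in $[0, R-1]$ and rounding to the nearest level. `equalize` is a hypothetical helper, not the paper's code; OpenCV offers `equalizeHist` for 8-bit images.

```python
from typing import List


def equalize(img: List[List[int]], levels: int = 256) -> List[List[int]]:
    """Histogram equalization: map gray level t_k to round((R-1) * CDF(t_k))."""
    n = sum(len(row) for row in img)
    counts = [0] * levels  # n_k for every gray level
    for row in img:
        for t in row:
            counts[t] += 1
    lut, cumulative = [], 0
    for k in range(levels):
        cumulative += counts[k]  # sum of n_j for j <= k
        # (R-1) * cumulative / n, rounded half up to the nearest integer level
        lut.append(int((levels - 1) * cumulative / n + 0.5))
    return [[lut[t] for t in row] for row in img]
```

For the 2×2 image `[[0, 0], [1, 3]]` with R = 4, the cumulative fractions are 0.5, 0.75, 0.75 and 1.0, so the levels 0, 1, 2 and 3 map to 2, 2, 2 and 3.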

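In this paper, bilateral filtering is used for denoising, because Gaussian filtering and similar smoothing methods blur edges. Bilateral filtering additionally weights each neighbor by the change in pixel intensity, which preserves edge information, so the image is denoised while its edges are retained.

The bilateral filter is expressed as:

$$\hat{I}(\mathbf{p}) = \frac{1}{W_{\mathbf{p}}} \sum_{\mathbf{q} \in S} G_{\sigma_s}(\|\mathbf{p} - \mathbf{q}\|) \, G_{\sigma_r}(|I(\mathbf{p}) - I(\mathbf{q})|) \, I(\mathbf{q}), \qquad W_{\mathbf{p}} = \sum_{\mathbf{q} \in S} G_{\sigma_s}(\|\mathbf{p} - \mathbf{q}\|) \, G_{\sigma_r}(|I(\mathbf{p}) - I(\mathbf{q})|)$$

where $S$ is the neighborhood of pixel $\mathbf{p}$, $G_{\sigma_s}$ is the spatial Gaussian kernel with standard deviation $\sigma_s$, $G_{\sigma_r}$ is the range (intensity) Gaussian kernel with standard deviation $\sigma_r$, and $W_{\mathbf{p}}$ is the normalization factor.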
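A direct, unoptimized implementation of the bilateral filter formula is sketched below for a grayscale image. The window radius and the values of σ_s and σ_r are illustrative defaults, not the paper's settings; OpenCV's `bilateralFilter` implements the same idea efficiently.

```python
import math
from typing import List

Image = List[List[float]]


def bilateral_filter(img: Image, radius: int = 2,
                     sigma_s: float = 2.0, sigma_r: float = 20.0) -> Image:
    """Grayscale bilateral filter; neighbors outside the image are skipped."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            centre = img[y][x]
            acc = norm = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    qy, qx = y + dy, x + dx
                    if not (0 <= qy < h and 0 <= qx < w):
                        continue
                    q = img[qy][qx]
                    g_s = math.exp(-(dx * dx + dy * dy) / (2 * sigma_s ** 2))  # spatial weight
                    g_r = math.exp(-((q - centre) ** 2) / (2 * sigma_r ** 2))  # range weight
                    acc += g_s * g_r * q
                    norm += g_s * g_r  # accumulates W_p
            out[y][x] = acc / norm
    return out
```

With σ_r = 10, a step edge from 0 to 100 passes through essentially unchanged, because neighbors across the edge receive a range weight of about exp(−50), whereas a plain Gaussian blur would smear it.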

In practice, tracking can still fail while the augmented reality system is running. Feature points must then be detected and matched again in the video image to update the homography matrix and re-register the target. SURF searches every point of the target image for features, so searching and matching over the whole image is relatively slow; when the target image is large, the search is especially time-consuming and cannot meet real-time requirements. This paper therefore optimizes the feature point search using the position obtained in the frame before tracking failed. If tracking fails in the current frame, the 2D image coordinates of the feature points in the previous frame are used, and the points to be matched are assumed to lie within a certain range around these positions. Because the augmented reality system needs only four or more matched feature points to compute the homography matrix, which provides all the information needed for registration, three-dimensional registration can still be achieved.
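The paper does not specify how large the search range around the previous feature positions is. The sketch below assumes a rectangular window: the bounding box of the last known feature positions, enlarged by a hypothetical `margin` in pixels and clipped to the image, within which feature detection and matching would be run. It also encodes the four-correspondence minimum needed for a homography.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Window = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def search_window(previous: Sequence[Point], margin: float,
                  width: int, height: int) -> Window:
    """Bounding box of the last known feature positions, grown by `margin`
    pixels and clipped to the image; the search is restricted to this box."""
    xs = [p[0] for p in previous]
    ys = [p[1] for p in previous]
    left = max(0, int(min(xs) - margin))
    top = max(0, int(min(ys) - margin))
    right = min(width, int(max(xs) + margin) + 1)
    bottom = min(height, int(max(ys) + margin) + 1)
    return left, top, right, bottom


def in_window(points: Sequence[Point], win: Window) -> List[int]:
    """Indices of keypoints that fall inside the search window."""
    left, top, right, bottom = win
    return [i for i, (x, y) in enumerate(points) if left <= x < right and top <= y < bottom]


def can_register(num_matches: int) -> bool:
    """A homography has 8 degrees of freedom, so at least 4 matches are required."""
    return num_matches >= 4
```

Cropping the frame to the window before running SURF shrinks the work roughly in proportion to the window area, which is where the speed-up over a full-image search comes from.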

Integrating the virtual scene with the real scene requires consistent lighting between the two. This paper studies real-time lighting rendering, introduces the basic theory of local and global illumination models, and simulates a flame effect with a particle system. A pixel enhancement algorithm is proposed and combined with the characteristics of the flame particle system to achieve global illumination, that is, the virtual firelight illuminates the surrounding real environment.

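Given the characteristics of ambient light, ambient lighting is usually approximated by the following model:

$$I_e = I_a K_a$$

where $I_a$ is the intensity of the ambient light and $K_a$ ($0 \le K_a \le 1$) is the ambient reflection coefficient of the object surface.

When drawing the scene, the contribution of other light sources must be considered in addition to ambient light. According to Lambert's cosine law, the intensity of diffuse light reflected from an ideal diffuse reflector is proportional to the cosine of the angle between the incident light and the surface normal:

$$I_d = I_p K_d \cos\theta = I_p K_d (\mathbf{L} \cdot \mathbf{N})$$

where $I_p$ is the intensity of the point light source, $K_d$ ($0 \le K_d \le 1$) is the diffuse reflection coefficient, $\theta$ is the angle of incidence, and $\mathbf{L}$ and $\mathbf{N}$ are the unit vectors toward the light source and along the surface normal.

The Phong specular reflection model (or simply the Phong model) is an empirical formula for the intensity of specularly reflected light:

$$I_s = I_p K_s \cos^n \alpha = I_p K_s (\mathbf{R} \cdot \mathbf{V})^n$$

where $K_s$ is the specular reflection coefficient, $\alpha$ is the angle between the ideal reflection direction $\mathbf{R}$ and the viewing direction $\mathbf{V}$ (both unit vectors), and $n$ is the specular exponent, which controls the size of the highlight.

Combining the ambient term, the Lambert diffuse reflection model and the Phong specular reflection model gives the Phong model for a scene lit by a single point light source:

$$I = I_a K_a + I_p K_d (\mathbf{L} \cdot \mathbf{N}) + I_p K_s (\mathbf{R} \cdot \mathbf{V})^n$$

If the scene contains several point light sources, superimposing the contribution of each source gives the Phong model for multiple point light sources:

$$I = I_a K_a + \sum_{i=1}^{m} I_{p,i} \left[ K_d (\mathbf{L}_i \cdot \mathbf{N}) + K_s (\mathbf{R}_i \cdot \mathbf{V})^n \right]$$

where $m$ is the number of point light sources, and $I_{p,i}$, $\mathbf{L}_i$ and $\mathbf{R}_i$ are the intensity, light direction and reflection direction for the $i$-th source.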
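The multi-light Phong model can be evaluated per surface point as in the sketch below. It assumes unit vectors pointing away from the surface, computes the reflection direction as R = 2(N·L)N − L, and, as is common in practice (an assumption, not stated in the paper), skips lights behind the surface and clamps a negative R·V to zero. The function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def reflect(l: Vec, n: Vec) -> Vec:
    """Ideal reflection of the light direction L about the normal N: R = 2(N.L)N - L."""
    k = 2.0 * dot(n, l)
    return (k * n[0] - l[0], k * n[1] - l[1], k * n[2] - l[2])


def phong(normal: Vec, view: Vec, lights: Sequence[Tuple[float, Vec]],
          ia: float, ka: float, kd: float, ks: float, shininess: float) -> float:
    """I = Ia*Ka + sum_i Ip_i * [Kd*(L_i.N) + Ks*(R_i.V)^n] for (Ip_i, L_i) in lights."""
    n, v = normalize(normal), normalize(view)
    intensity = ia * ka  # ambient term
    for ip, light_dir in lights:
        l = normalize(light_dir)
        n_dot_l = dot(n, l)
        if n_dot_l <= 0.0:  # light is behind the surface
            continue
        r_dot_v = max(0.0, dot(reflect(l, n), v))
        intensity += ip * (kd * n_dot_l + ks * r_dot_v ** shininess)
    return intensity
```

With the light, normal and viewer all aligned, each light contributes Ip(Kd + Ks), so Ka = 0.1, Kd = 0.5 and Ks = 0.4 give an intensity of 1.0 for one unit light and 1.9 for two.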

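In the global illumination model, the light intensity $I$ at a point on an object surface includes, in addition to the direct local illumination, light arriving from other objects by reflection and transmission. A common formulation is Whitted's model:

$$I = I_c + K_s I_s + K_t I_t$$

where $I_c$ is the intensity computed by the local illumination model (the Phong model), $I_s$ and $I_t$ are the intensities of light arriving along the ideal specular reflection and refraction (transmission) directions, and $K_s$ and $K_t$ are the specular reflection and transmission coefficients.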

Using the random, dynamic behavior of the particle system and the property of the global illumination model that light reflected from one object illuminates others, the lighting interaction between the virtual scene and the real scene is achieved.

Here, virtual lighting is used to increase the intensity of the rendered scene. Pixel enhancement means changing not only the brightness of the scene pixels but also, correspondingly, their color values. The currently stored lighting pixel values are obtained with OpenGL's programmable fragment shader, and OpenGL alpha blending then combines them with the lighting pixel values of the virtual objects to form the new, blended scene.

Assuming

Where

However, it is not necessary to compute a new value for every pixel of the entire captured image; the original pixel color value can be used instead, i.e.:

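Compare this with the standard alpha blending equation:

$$C = \alpha C_s + (1 - \alpha) C_d$$

where $C_s$ is the source (incoming) color, $C_d$ is the destination color already stored in the frame buffer, $\alpha$ is the source alpha value, and $C$ is the blended result.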
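The standard blend can be sketched per pixel as below, with colors in [0, 1]. `alpha_blend` corresponds to OpenGL's `glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)`; `enhance` is a hypothetical illustration of pixel enhancement (blending a virtual light color over a captured pixel and clamping the result), since the paper's own enhancement formula is not reproduced here.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def alpha_blend(src: RGB, dst: RGB, alpha: float) -> RGB:
    """C = alpha*C_s + (1 - alpha)*C_d, applied to each color channel."""
    return tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(src, dst))


def enhance(pixel: RGB, light: RGB, alpha: float) -> RGB:
    """Hypothetical pixel enhancement: blend a virtual light color over a captured
    pixel and clamp to [0, 1], so brightness and color change together."""
    return tuple(min(1.0, max(0.0, c)) for c in alpha_blend(light, pixel, alpha))
```

Blending a warm firelight color over a gray pixel raises the red channel while leaving the blue channel nearly unchanged, which is the "brightness and color together" effect described above.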

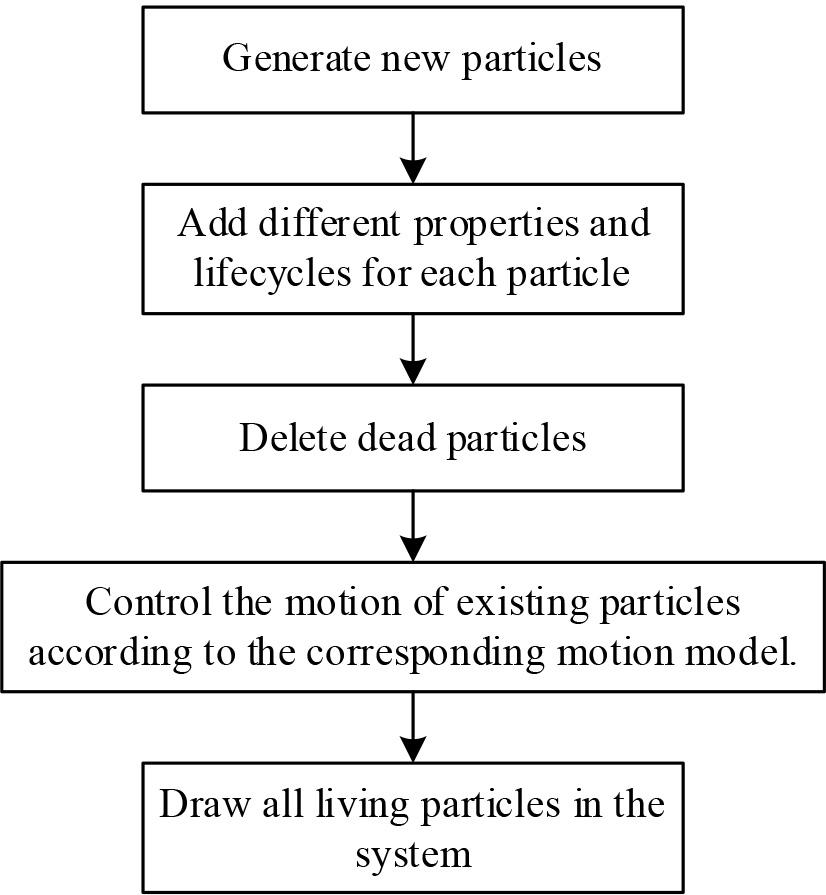

The flame is simulated with a particle system and rendered with the real-time lighting technique; the simulation process is shown in Fig. 1. In each frame, new particles are generated and each is assigned its attributes and lifetime; dead particles are deleted; the surviving particles are moved according to the corresponding motion model; and all surviving particles in the system are drawn.

Flame simulation process block diagram
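The four-stage loop described for Fig. 1 can be sketched as a tiny particle system. The spawn count, lifetime range, velocities and buoyancy are hypothetical values chosen only for illustration, and drawing is left to the renderer.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Particle:
    x: float
    y: float      # height; flames rise along +y
    vx: float
    vy: float
    life: float   # remaining lifetime in seconds


class FlameEmitter:
    """Illustrative particle loop: spawn, age and cull, move, then hand off for drawing."""

    def __init__(self, spawn_per_step: int = 30, max_life: float = 1.0,
                 buoyancy: float = 0.5, seed: int = 0) -> None:
        self.spawn_per_step = spawn_per_step
        self.max_life = max_life
        self.buoyancy = buoyancy  # upward acceleration (hypothetical units)
        self.rng = random.Random(seed)
        self.particles: List[Particle] = []

    def step(self, dt: float) -> List[Particle]:
        # 1. Generate new particles with random attributes and lifetimes.
        for _ in range(self.spawn_per_step):
            self.particles.append(Particle(
                x=self.rng.uniform(-0.1, 0.1), y=0.0,
                vx=self.rng.uniform(-0.05, 0.05), vy=self.rng.uniform(0.5, 1.0),
                life=self.rng.uniform(0.5 * self.max_life, self.max_life)))
        # 2. Age every particle and delete the dead ones.
        for p in self.particles:
            p.life -= dt
        self.particles = [p for p in self.particles if p.life > 0.0]
        # 3. Move the survivors with a simple motion model (drift plus buoyancy).
        for p in self.particles:
            p.vy += self.buoyancy * dt
            p.x += p.vx * dt
            p.y += p.vy * dt
        # 4. Return the survivors; a renderer would draw these (e.g. as billboards).
        return self.particles
```

Because every particle has a finite lifetime, the population levels off at roughly the spawn rate times the average lifetime, which keeps the per-frame cost bounded.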

The experimental results show that the self-illuminating virtual object (the flame) lights up the real desktop, meeting the requirement of the global illumination model, namely the transfer of light energy between objects. Objects under the local illumination model produce the corresponding ambient, diffuse or specular light according to the given illumination. Applying global illumination in the augmented reality system meets the requirements of real-time lighting rendering between the virtual and real worlds and increases the realism of virtual objects.

The hardware environment is a laptop with an Intel(R) Core(TM) i7-8250U CPU @ 1.60 GHz (1.80 GHz), 8 GB of RAM and 64-bit Windows 10. The implementation was developed in Visual Studio 2023 in C++, using OpenCV 4.5.5 for image processing and the OpenGL graphics library. To evaluate the improved algorithm, video sequences from the public OTB100 dataset were used for target tracking experiments, and the results were compared with those of the TLD and KCF algorithms.

Fig. 2 shows the accuracy and success rate of the proposed algorithm, the TLD algorithm and the KCF algorithm on the OTB100 benchmark. The curves in both plots show that the proposed algorithm ranks ahead of the other two. The target tracking algorithm based on improved feature points proposed in this paper achieves an accuracy of 0.821 and a success rate of 0.813 on OTB100.

Accuracy and success rate of target tracking algorithm
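The paper reports accuracy and success rate on OTB100 without restating how they are computed. For reference, the sketch below follows the usual OTB conventions (an assumption about what the paper used): accuracy (precision) is the fraction of frames whose center location error is at most 20 pixels, and the success score is the area under the success plot, approximated by averaging the fraction of frames whose overlap (IoU) exceeds each threshold from 0 to 1 in steps of 0.05. Boxes are (x, y, width, height); the function names are illustrative.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, width, height)


def iou(a: Box, b: Box) -> float:
    ix = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def centre_error(a: Box, b: Box) -> float:
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def precision(pred: Sequence[Box], gt: Sequence[Box], px: float = 20.0) -> float:
    """Fraction of frames whose center location error is within `px` pixels."""
    return sum(centre_error(p, g) <= px for p, g in zip(pred, gt)) / len(gt)


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Mean success rate over overlap thresholds 0, 0.05, ..., 1 (area under the success plot)."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / steps
```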

The improved feature point-based target tracking algorithm was used to track each captured video sequence, with the feature extraction and matching method used to extract, describe and match the feature points. The camera pose was then computed, and finally the OpenGL graphics library was used to render the virtual model and register it in the real environment. The tracking and registration results of the virtual model show that, under rotation and occlusion, the improved method is more accurate and stable than the original algorithm.

The software and hardware environments are the same as above. These experiments analyze rendering performance at different voxel resolutions, mainly to verify the rendering quality and real-time performance of the global illumination rendering scheme proposed in this paper.

The rate at which an application displays images is measured in frames per second (FPS). At one frame per second there is little sense of interactivity; at 6 FPS interactivity starts to increase; and an application that displays at 15 FPS can be regarded as fully real-time, so the user can concentrate on the action and interaction.

Fig. 3 shows how the FPS varies with voxel resolution: panels (a), (b) and (c) correspond to voxel resolutions of 256, 512 and 128, respectively.

FPS changes under different voxels

Panel (a) shows the frame rate at a voxel resolution of 256. Apart from the drop at the start, when other operations such as scene loading are performed, the frame rate stays at about 40 FPS, giving good real-time performance. Starting at 54 s, the surface roughness of the objects and scene was adjusted from the lowest to the highest value. The frame rate fluctuated during the adjustment, but afterwards it rose to about 43.2 FPS.

Panel (b) shows the frame rate at a voxel resolution of 512. It is markedly lower than at 256, mostly between 15 and 20 FPS. The roughness was adjusted in the same way from 50 s onwards.

In panel (c), the frame rate at a voxel resolution of 128 differs little from that at 256, stabilizing at about 42.7 FPS. Adjusting the roughness from 90 s onwards did not noticeably improve performance but instead caused fluctuations.

The experiment also examined rendering quality at different voxel resolutions, keeping all other parameters constant, using the same virtual object model and changing only the voxel resolution. The results were obtained with a scene roughness of 0 at resolutions of 128, 256 and 512. The 512 resolution gives the most detailed result, but its frame rate is low and its quality is not much better than at 256. The 128 resolution is the coarsest, shows some enlargement of object size and serious deformation, and its frame rate is about the same as at 256. Therefore, 256 is the best voxel resolution setting in this paper.

The cloud simulation system runs on a computer with an AMD Athlon(tm) 64 4000 processor, 1 GB of RAM, an AMD Radeon HD 7950 graphics card and the Windows XP operating system; it was developed in VC++ 6.0 using DirectX 9.1.

For cloud rendering, the work is distributed among the corresponding textures and advanced in discrete time steps. Building on the preceding sections, each operator of the Navier-Stokes (NS) equations is applied in turn, and the results of each calculation serve as the input to the next. At each discrete time step, the cloud simulation on the GPU proceeds as follows:

Step 1: Add the source density, external sources, illumination, vorticity control forces, wind and buoyancy to obtain an external force field, which acts on the velocity field.

Step 2: Advect the velocity field.

Step 3: Read the velocity field texture and solve the diffusion equation with the Jacobi iterative method to obtain the latest state of the velocity field, denoted $\mathbf{w}$, and correct the velocities at the boundary.

Step 4: Compute the divergence of the divergent velocity field $\mathbf{w}$ and solve the Poisson pressure equation $\nabla^2 p = \nabla \cdot \mathbf{w}$ with the Jacobi iterative method to obtain the pressure field texture.

Step 5: Compute $\mathbf{u}^{\text{new}} = \mathbf{w} - \nabla p$ to correct the velocity field, and adjust the velocities at the boundary positions.

Step 6: According to the density source, compute the base density field of the cloud particles with the cloud density function, then transport (advect) the density field with the current velocity field.

Step 7: Compute the illumination using the method described above.

Step 8: Combine the illumination texture with the density texture and use the result as the final drawing color.

Step 9: Move the viewpoint and check whether the error has reached its limit. If so, go to Step 7; if not, choose an appropriate model according to the distance from the viewpoint to the center of the cloud and draw it. Experiments show that an appropriate setting of the Jacobi iterations improves the visual effect.
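Steps 4 and 5 (the pressure projection) can be sketched on the CPU as follows, assuming a small collocated 2D grid with unit spacing, central differences and zero pressure on the boundary; these are simplifications, and the paper's GPU texture layout and boundary handling are not specified. The divergent velocity field $\mathbf{w}$ is passed in as its components (u, v); the code solves $\nabla^2 p = \nabla \cdot \mathbf{w}$ by Jacobi iteration and returns $\mathbf{w} - \nabla p$. The function names are illustrative.

```python
from typing import List, Tuple

Grid = List[List[float]]  # indexed [row (y)][column (x)]


def zeros(n: int) -> Grid:
    return [[0.0] * n for _ in range(n)]


def divergence(u: Grid, v: Grid) -> Grid:
    """Central-difference divergence of (u, v) at interior cells."""
    n = len(u)
    div = zeros(n)
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            div[i][j] = 0.5 * ((u[i][j + 1] - u[i][j - 1]) + (v[i + 1][j] - v[i - 1][j]))
    return div


def jacobi_poisson(b: Grid, iterations: int) -> Grid:
    """Solve laplacian(p) = b with the 5-point stencil; p stays 0 on the boundary."""
    n = len(b)
    p = zeros(n)
    for _ in range(iterations):
        nxt = zeros(n)
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                nxt[i][j] = 0.25 * (p[i - 1][j] + p[i + 1][j] + p[i][j - 1] + p[i][j + 1] - b[i][j])
        p = nxt
    return p


def project(u: Grid, v: Grid, iterations: int = 40) -> Tuple[Grid, Grid]:
    """Step 4: solve laplacian(p) = div(w); Step 5: return w - grad(p)."""
    p = jacobi_poisson(divergence(u, v), iterations)
    n = len(u)
    u_new, v_new = [row[:] for row in u], [row[:] for row in v]
    for i in range(1, n - 1):
        for j in range(1, n - 1):
            u_new[i][j] -= 0.5 * (p[i][j + 1] - p[i][j - 1])
            v_new[i][j] -= 0.5 * (p[i + 1][j] - p[i - 1][j])
    return u_new, v_new
```

Each Jacobi sweep reads only the previous iterate, which is why the method maps well to GPU textures: every cell can be updated in parallel in a fragment shader. More sweeps give a more accurate pressure field at a higher cost per frame.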

The simulation is accelerated on the GPU, and with the billboard technique for visual voxels, thousands of particles can be rendered at about 20 frames per second. With 50 cloud clusters and between 6,000 and 9,000 particles, the drawing requirements are met when the frame rate is in the range [15, 50]. The cloud rendering speeds obtained are listed in Table 1.
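Billboarding draws each particle as a flat quad that always faces the camera, so a volumetric-looking cloud needs only one textured quad per particle. The sketch below computes the quad's corners from the camera position, assuming a y-up world; the function name and parameters are illustrative, not taken from the paper's DirectX implementation.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / n, a[1] / n, a[2] / n)


def billboard_corners(centre: Vec, camera_pos: Vec, half_size: float,
                      world_up: Vec = (0.0, 1.0, 0.0)) -> List[Vec]:
    """Four corners of a quad centred on a particle and facing the camera."""
    to_cam = normalize(tuple(c - p for c, p in zip(camera_pos, centre)))
    right = normalize(cross(world_up, to_cam))
    up = cross(to_cam, right)  # unit length, since to_cam and right are orthonormal
    corners = []
    for sr, su in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
        corners.append(tuple(centre[k] + half_size * (sr * right[k] + su * up[k])
                             for k in range(3)))
    return corners
```

The construction breaks down when the view direction is parallel to `world_up`; a renderer would then pick a different reference axis.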

Cloud rendering speed

| Grid resolution | Texture resolution | Number of particles | Number of clouds | Frame rate (FPS) |
|---|---|---|---|---|
| 64×64 | 64×64 | 8251 | 60 | 6 |
| 64×64 | 64×64 | 2765 | 62 | 12 |
| 64×64 | 64×64 | 3412 | 34 | 14 |
| 64×64 | 64×64 | 1766 | 32 | 13 |
| 64×64 | 64×64 | 1896 | 18 | 14 |
| 32×32 | 32×32 | 897 | 15 | 21 |
| 32×32 | 32×32 | 7849 | 53 | 14 |
| 32×32 | 32×32 | 3754 | 48 | 12 |
| 32×32 | 32×32 | 4506 | 26 | 20 |
| 32×32 | 32×32 | 1325 | 25 | 15 |
| 32×32 | 32×32 | 2346 | 19 | 17 |

The results show that cloud drawing efficiency is mainly affected by three factors: the texture resolution, the grid resolution and the number of particles.

In these runs, the texture resolution and the grid resolution were kept equal. When both are 64 × 64, with 8,251 particles and 60 clouds, the actual frame rate is 6 FPS. In general, lower resolution and fewer particles give a higher frame rate, so the resolution and particle count can be adjusted to suit actual needs.
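As a rough check of the particle-count trend against Table 1, the Pearson correlation between particle count and frame rate over its eleven rows can be computed as below. The values are copied from the table; the correlation is only an illustration, since the rows also differ in resolution and cloud count.

```python
import math

# (number of particles, frame rate in FPS) copied from Table 1
rows = [(8251, 6), (2765, 12), (3412, 14), (1766, 13), (1896, 14), (897, 21),
        (7849, 14), (3754, 12), (4506, 20), (1325, 15), (2346, 17)]


def pearson(pairs):
    """Pearson correlation coefficient of a list of (x, y) pairs."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    syy = sum((y - my) ** 2 for _, y in pairs)
    return sxy / math.sqrt(sxx * syy)


r = pearson(rows)
print(f"Pearson r (particles vs FPS) = {r:.2f}")
```

The coefficient comes out negative, consistent with the statement that fewer particles give a higher frame rate.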

Based on the cloud density texture and changes in the control parameters, this chapter verifies the dynamic changes of the clouds, compares drawing efficiency under different conditions such as texture resolution and number of particles, and provides static and dynamic cloud effect images. The global illumination model based on the pixel enhancement algorithm and the particle system proposed in this paper can successfully simulate dynamic cloud images and can serve as a practical technique for dynamic interactive comics.

The realism of the experience offered by augmented reality-based comics is reflected most directly in users' subjective impressions, so a suitable rating scale needs to be designed. A user questionnaire was conducted, and the results of two groups were obtained and compared to draw conclusions.

For this test, an “Interactive Augmented Reality Comic User Evaluation Scale” was designed on the basis of the Likert scale. The Likert scale is a summated rating scale: each statement has five responses, “strongly agree”, “agree”, “not necessarily”, “disagree” and “strongly disagree”, scored 5, 4, 3, 2 and 1 respectively. A respondent's total score across the statements on the same topic indicates the direction and strength of his or her attitude toward the issue.
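The summated scoring can be sketched as follows, using the five response labels and scores given for the scale; the helper names are hypothetical. `item_means` yields per-statement averages of the kind reported in Table 2.

```python
from statistics import mean
from typing import List

# Response labels and scores as defined for the scale
LIKERT = {"strongly agree": 5, "agree": 4, "not necessarily": 3,
          "disagree": 2, "strongly disagree": 1}


def total_score(responses: List[str]) -> int:
    """Summated rating: one respondent's total over all statements."""
    return sum(LIKERT[r] for r in responses)


def item_means(sheets: List[List[str]]) -> List[float]:
    """Average score of each statement across respondents."""
    return [mean(LIKERT[sheet[k]] for sheet in sheets) for k in range(len(sheets[0]))]
```

A respondent answering “agree” and “strongly agree” to two statements gets a total of 9; across respondents, each statement's mean summarizes how strongly the group agrees with it.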

In this experiment, 50 readers of dynamic interactive comics were randomly selected as subjects and divided into two groups of 25. Each subject filled out the scale, and the user evaluation results for interactive augmented reality comics are shown in Table 2.

User evaluation results for interactive augmented reality comics

| Item | Statement | Average score |
|---|---|---|
| 1 | Augmented reality comics bring me a comfortable experience. | 4.66 |
| 2 | The images of flowers, the sky and other objects generated in augmented reality comics are realistic. | 4.51 |
| 3 | Augmented reality comics are very interactive. | 4.49 |
| 4 | I feel natural when I use augmented reality comics. | 4.23 |
| 5 | Augmented reality comics give me rich feedback. | 4.75 |
| 6 | I prefer the presentation of augmented reality comics. | 4.81 |

The scale consists of six statements: “augmented reality comics can bring me a comfortable experience”, “the images of flowers and the sky generated in augmented reality comics are very realistic”, “augmented reality comics are very interactive”, “I feel natural when I use the augmented reality comics system”, “augmented reality comics give me a lot of feedback”, and “I prefer the presentation of augmented reality comics”.

Overall, users rated their experience with augmented reality-based comics favorably: every statement averaged above 4.2 out of 5, and the statement on a comfortable experience scored 4.66, indicating that users recognize augmented reality as a way of enhancing how comic images are expressed.

This paper summarizes the new forms of contemporary comic development and innovation (strip comics and dynamic interactive comics), proposes using augmented reality technology to innovate the expression of comic images, improves the tracking and registration technique and the real-time lighting rendering technique, and thereby optimizes the reading experience of comic audiences.

The SURF algorithm is used as the basis for finding and matching natural feature points, and the feature point search is optimized for the tracking failures that occur when an augmented reality system runs in practice. The target tracking algorithm based on this improved feature point search performs well on the OTB100 benchmark, clearly outperforming the TLD and KCF algorithms. Analysis of the frame rate of the improved real-time lighting rendering technique at different voxel resolutions shows that the global illumination model based on the pixel enhancement algorithm gives the best results at a voxel resolution of 256. Applying the improved tracking and registration technique together with the real-time lighting rendering technique to simulate cloud images in dynamic comics makes the clouds interactive and visual, and offers a new way of presenting rhetorical symbols in dynamic comic images. The audience evaluation indicates that the proposed augmented reality approach further integrates virtual and real scenes and gives comic audiences a real-time, interactive reading experience that blends the virtual with the real.

Funding: Scientific Research Project of the Jilin Provincial Education Department, “The study of image rhetoric and symbol expression of ideological and political cartoons” (No. JJKH20231351SK).